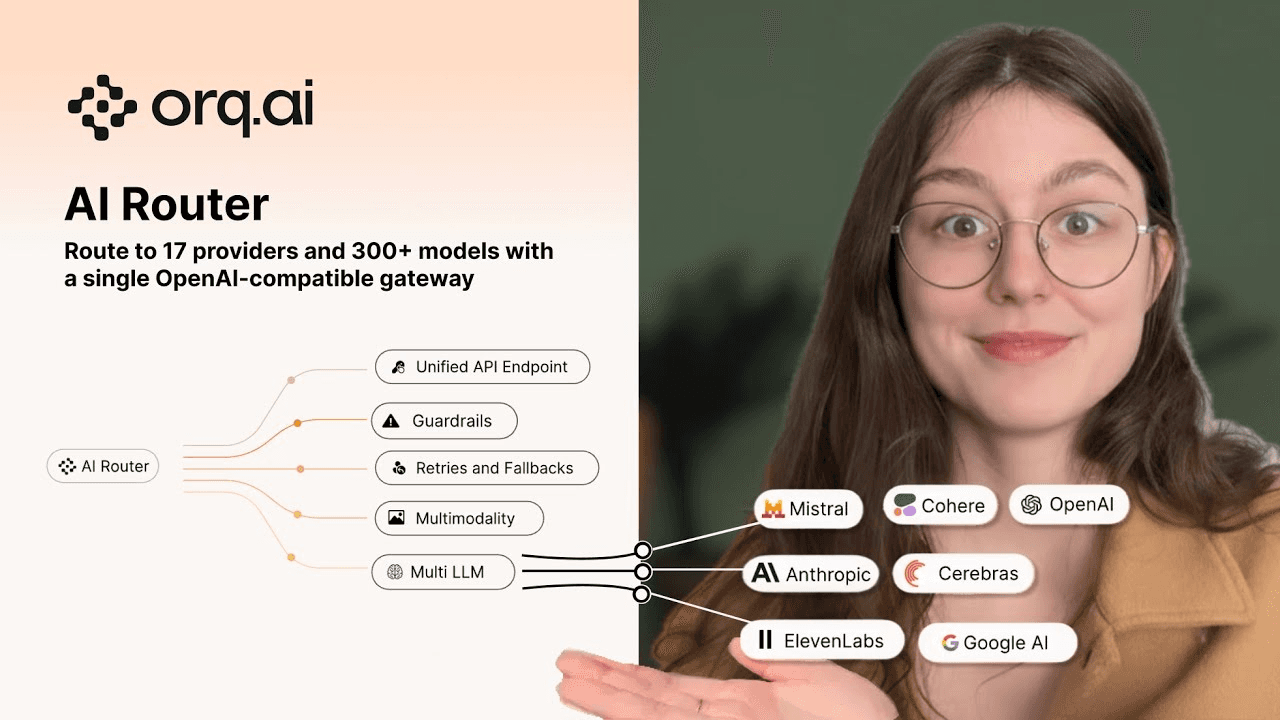

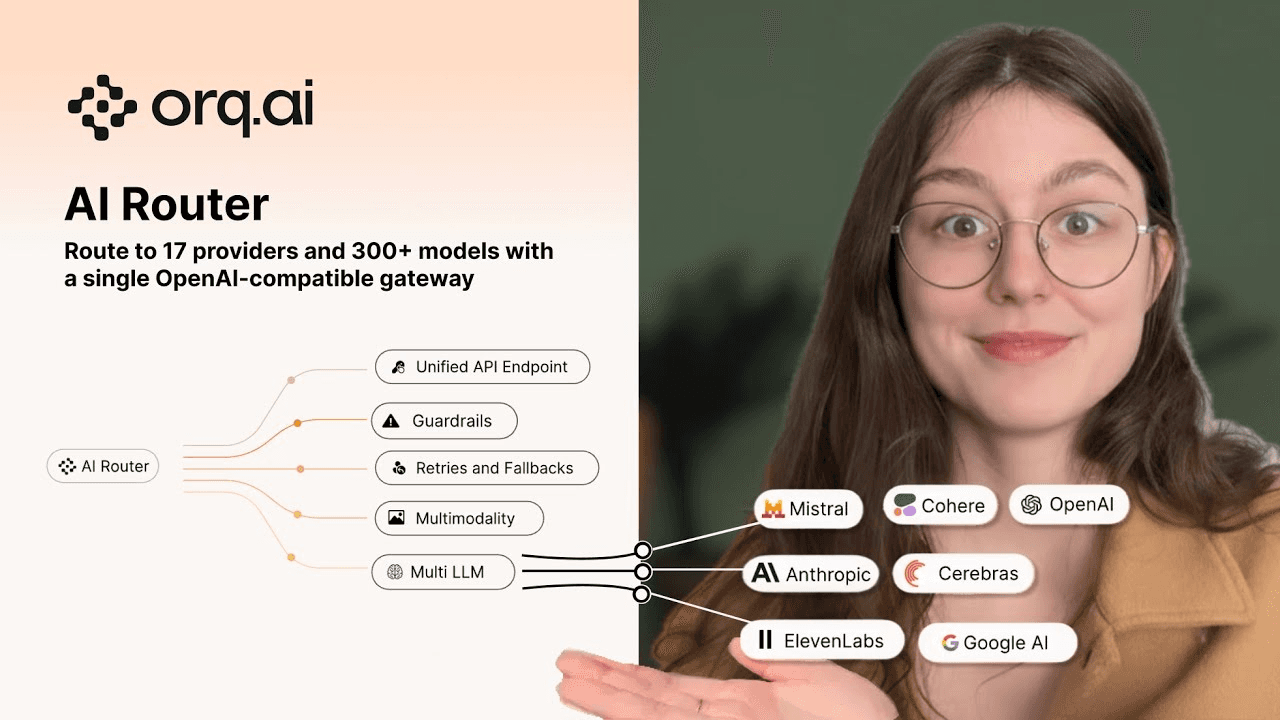

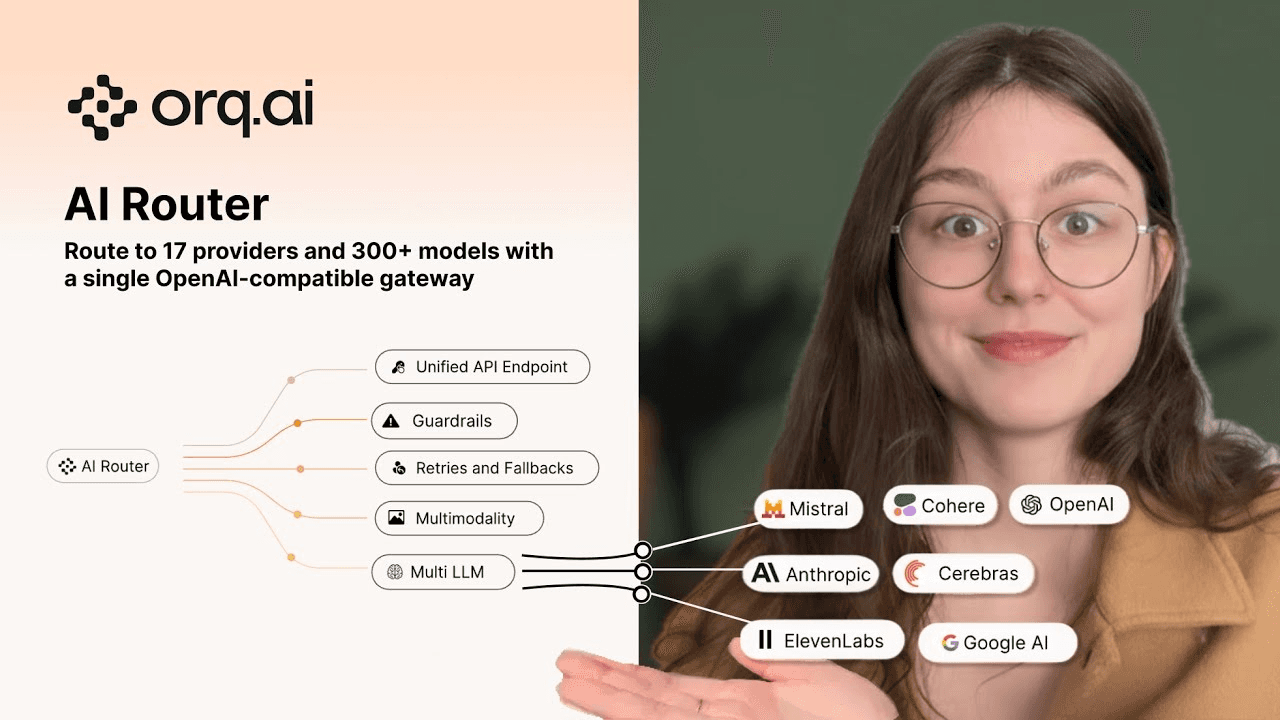

One API key for every LLM

Route all your AI traffic through a single, production-ready gateway. Swap models without rewrites. Stay in control as you scale.

Secure by design

Europe-based

Trusted by 100+ AI teams already using a single interface for all LLMs

Why teams start with the AI Router

Multimodality

Multi-provider

Routing logic

Bring your own keys

Route to any model

Route to 20+ providers and 300+ models with a single OpenAI-compatible gateway. Bring your own keys or models for full control.
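
As a rough illustration of what "OpenAI-compatible" means in practice, the sketch below builds a chat completion request in which the only provider-specific part is the `provider/model` string. The base URL, the `/chat/completions` path, and the `buildChatRequest` helper are assumptions made for illustration, not documented Orq.ai values; take the real endpoint from the product documentation.

```typescript
// Hypothetical gateway base URL; replace it with the endpoint from your account.
const GATEWAY_BASE_URL = "https://gateway.example.com/v1";

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface GatewayRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Builds an OpenAI-style chat completion request. Only `model` changes
// when switching providers; the rest of the payload stays the same.
function buildChatRequest(
  apiKey: string,
  model: string,
  messages: ChatMessage[],
): GatewayRequest {
  return {
    url: `${GATEWAY_BASE_URL}/chat/completions`,
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ model, messages }),
  };
}

const messages: ChatMessage[] = [{ role: "user", content: "Hello" }];
const viaOpenAI = buildChatRequest("YOUR_API_KEY", "openai/gpt-4o", messages);
const viaAnthropic = buildChatRequest("YOUR_API_KEY", "anthropic/claude-3-haiku", messages);
```

Both requests target the same URL with the same key, so either one can be passed to `fetch(req.url, { method: req.method, headers: req.headers, body: req.body })` without further changes.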

Auto retry

Fallback logic

Reliability

100% uptime

    // Cost-optimized: cheap → expensive
    fallbacks: [{ model: "openai/gpt-3.5-turbo" }, { model: "openai/gpt-4o" }];

    // Speed-optimized: fast → comprehensive
    fallbacks: [
      { model: "openai/gpt-4o-mini" },
      { model: "anthropic/claude-3-haiku" },
    ];

    // Reliability-optimized: different providers
    fallbacks: [
      { model: "openai/gpt-4o" },
      { model: "anthropic/claude-3-sonnet" },
      { model: "azure/gpt-4o" },
    ];

Higher availability

Built for production traffic with retries, timeouts, rate limits, and automatic failover. Your app keeps working even when models don’t.
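
To make the failover idea concrete, here is a minimal client-side sketch of what walking a `fallbacks` list with retries could look like. It is not Orq.ai's implementation: `callWithFallbacks`, `ModelCall`, and the retry count are hypothetical names and values, and the model call is injected so the sketch runs without network access.

```typescript
interface FallbackTarget {
  model: string;
}

// The actual model call is injected; in a real app it would hit the gateway.
type ModelCall = (model: string, prompt: string) => Promise<string>;

interface FallbackResult {
  model: string;
  output: string;
  attempts: number;
}

// Tries each model in order, retrying each one up to `retriesPerModel`
// extra times before moving on to the next entry in the list.
async function callWithFallbacks(
  targets: FallbackTarget[],
  prompt: string,
  call: ModelCall,
  retriesPerModel = 1,
): Promise<FallbackResult> {
  let attempts = 0;
  let lastError: unknown = new Error("no fallback targets configured");

  for (const { model } of targets) {
    for (let retry = 0; retry <= retriesPerModel; retry++) {
      attempts++;
      try {
        const output = await call(model, prompt);
        return { model, output, attempts };
      } catch (err) {
        lastError = err; // remember the failure and keep going
      }
    }
  }
  throw lastError; // every model failed on every attempt
}

// Simulated outage: every OpenAI call fails, everything else succeeds.
const flakyCall: ModelCall = async (model, prompt) => {
  if (model.startsWith("openai/")) throw new Error(`${model} unavailable`);
  return `[${model}] ${prompt}`;
};

const reliabilityChain: FallbackTarget[] = [
  { model: "openai/gpt-4o" },
  { model: "anthropic/claude-3-sonnet" },
  { model: "azure/gpt-4o" },
];

callWithFallbacks(reliabilityChain, "ping", flakyCall).then((result) => {
  console.log(result.model, result.attempts); // anthropic/claude-3-sonnet 3
});
```

In this sketch the first model is tried twice (one call plus one retry) before the chain moves to a different provider, which is why ordering the list across providers matters for reliability.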

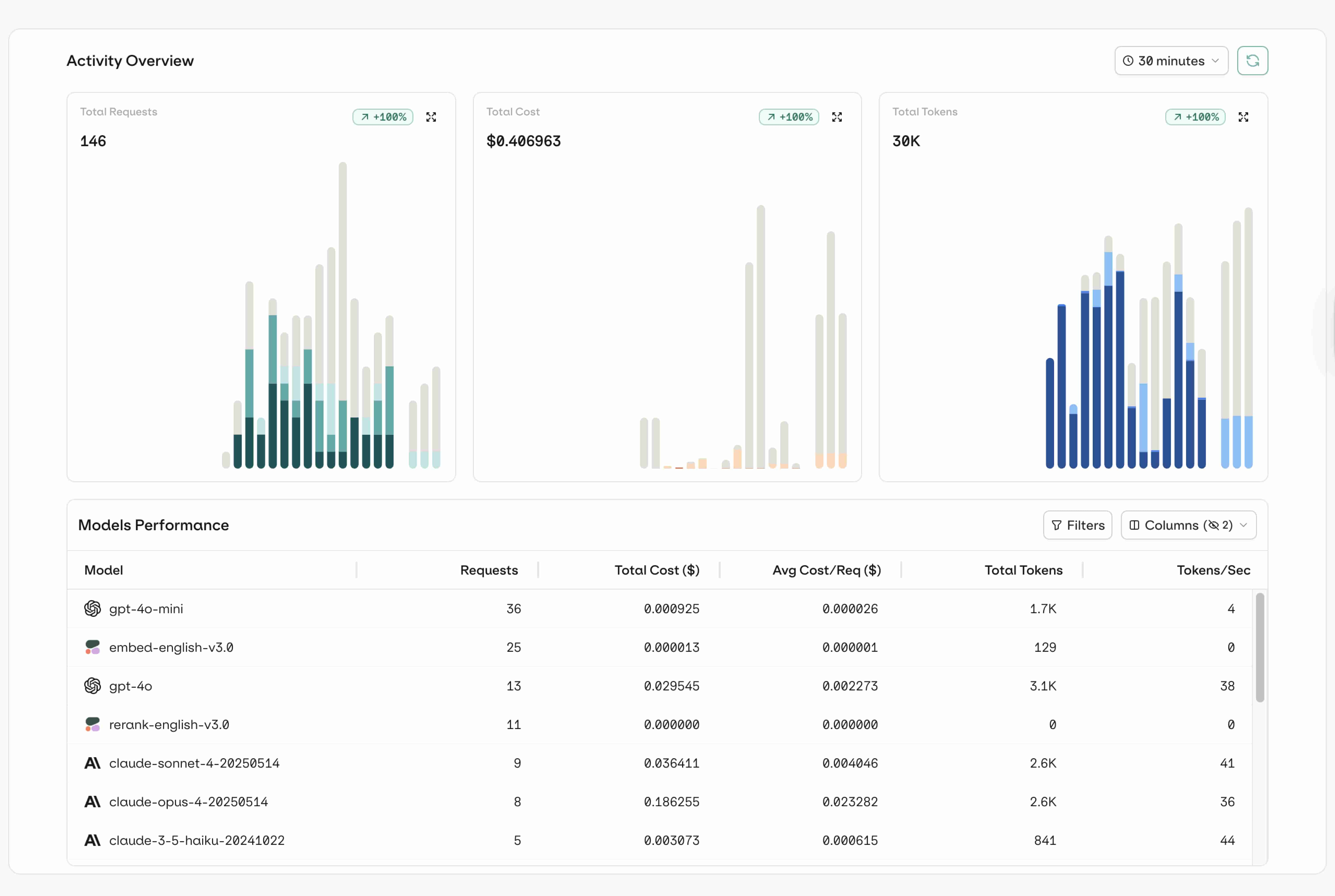

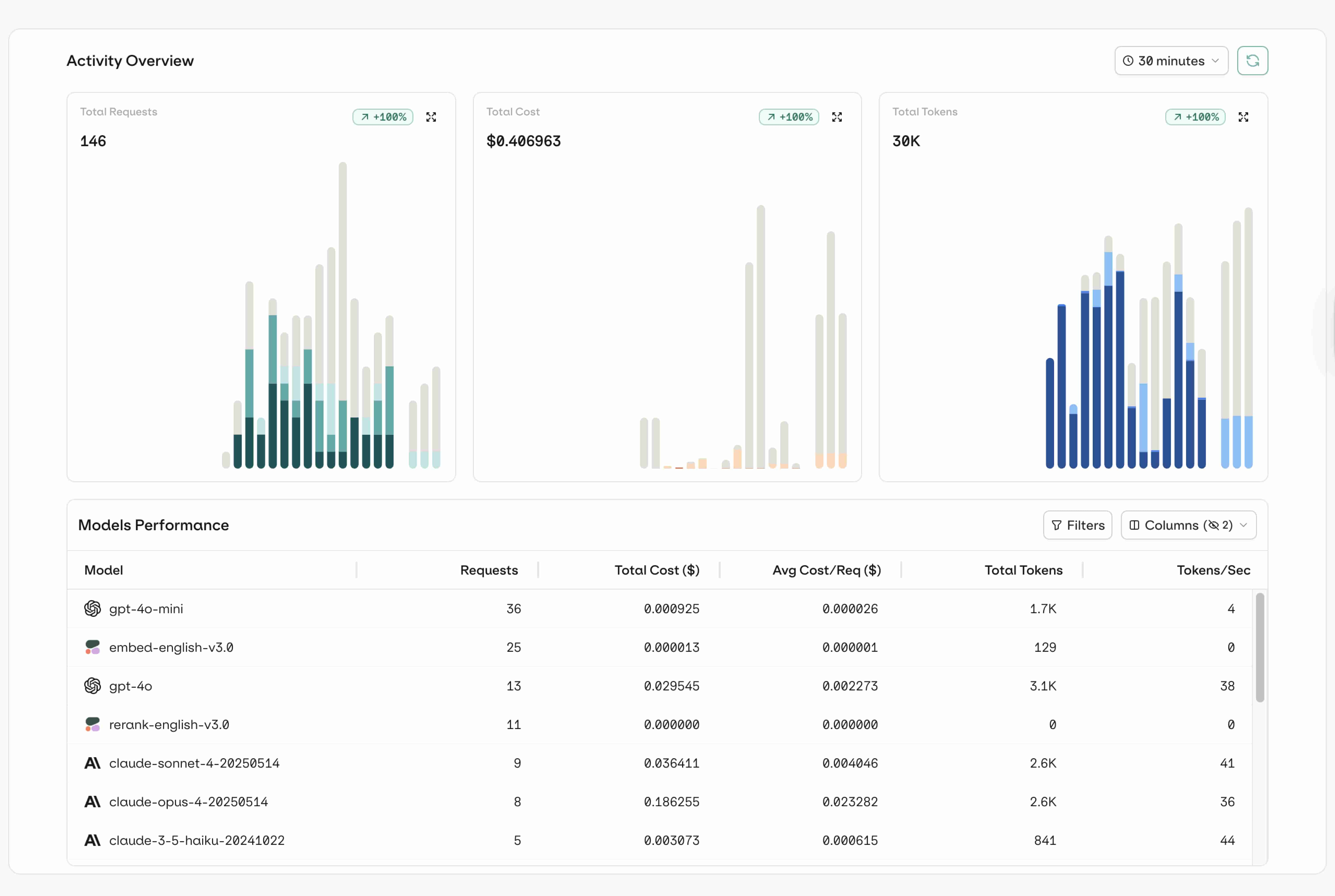

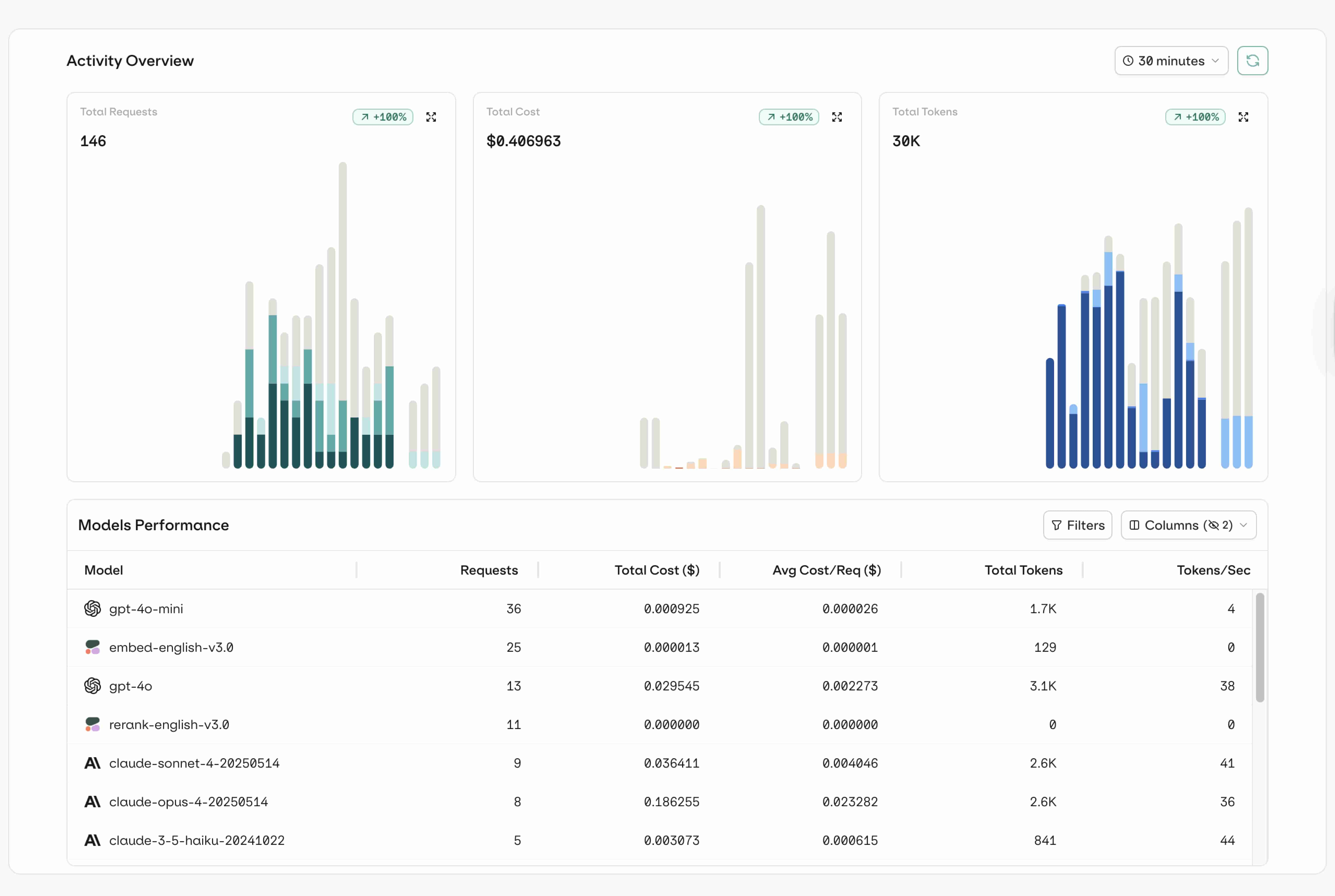

Budget control

Dashboard

Analytics

Identity tracking

Stay in control

Track tokens and costs in real time, set limits, and optimize spend across models as usage scales – without surprises.
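
A spend limit of this kind can be pictured as a running total that is checked before each request. The sketch below is illustrative only: `BudgetTracker`, its method names, and the per-identity keying are hypothetical and are not taken from Orq.ai's API.

```typescript
// Tracks spend per identity (for example a user or team id) against one limit.
class BudgetTracker {
  private spentUsd = new Map<string, number>();
  private limitUsd: number;

  constructor(limitUsd: number) {
    this.limitUsd = limitUsd;
  }

  // Total recorded spend for an identity; unknown identities have spent 0.
  spent(identity: string): number {
    return this.spentUsd.get(identity) ?? 0;
  }

  // Records the cost of a completed request.
  record(identity: string, costUsd: number): void {
    this.spentUsd.set(identity, this.spent(identity) + costUsd);
  }

  // Returns true if a request with the estimated cost still fits the budget.
  canSpend(identity: string, estimatedCostUsd: number): boolean {
    return this.spent(identity) + estimatedCostUsd <= this.limitUsd;
  }
}

const budget = new BudgetTracker(10); // 10 USD per identity
budget.record("team-search", 9.5);

const smallRequestAllowed = budget.canSpend("team-search", 0.25); // fits under the limit
const largeRequestAllowed = budget.canSpend("team-search", 1.0);  // would exceed the limit
```

Checking before the call, rather than after, is what prevents a single large request from pushing an identity over its limit.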

Observability

Tracing

Span

Threads

Debugging

See everything

Apply guardrails once and see every request end to end. Validate outputs, handle sensitive data, and debug issues from a single place.

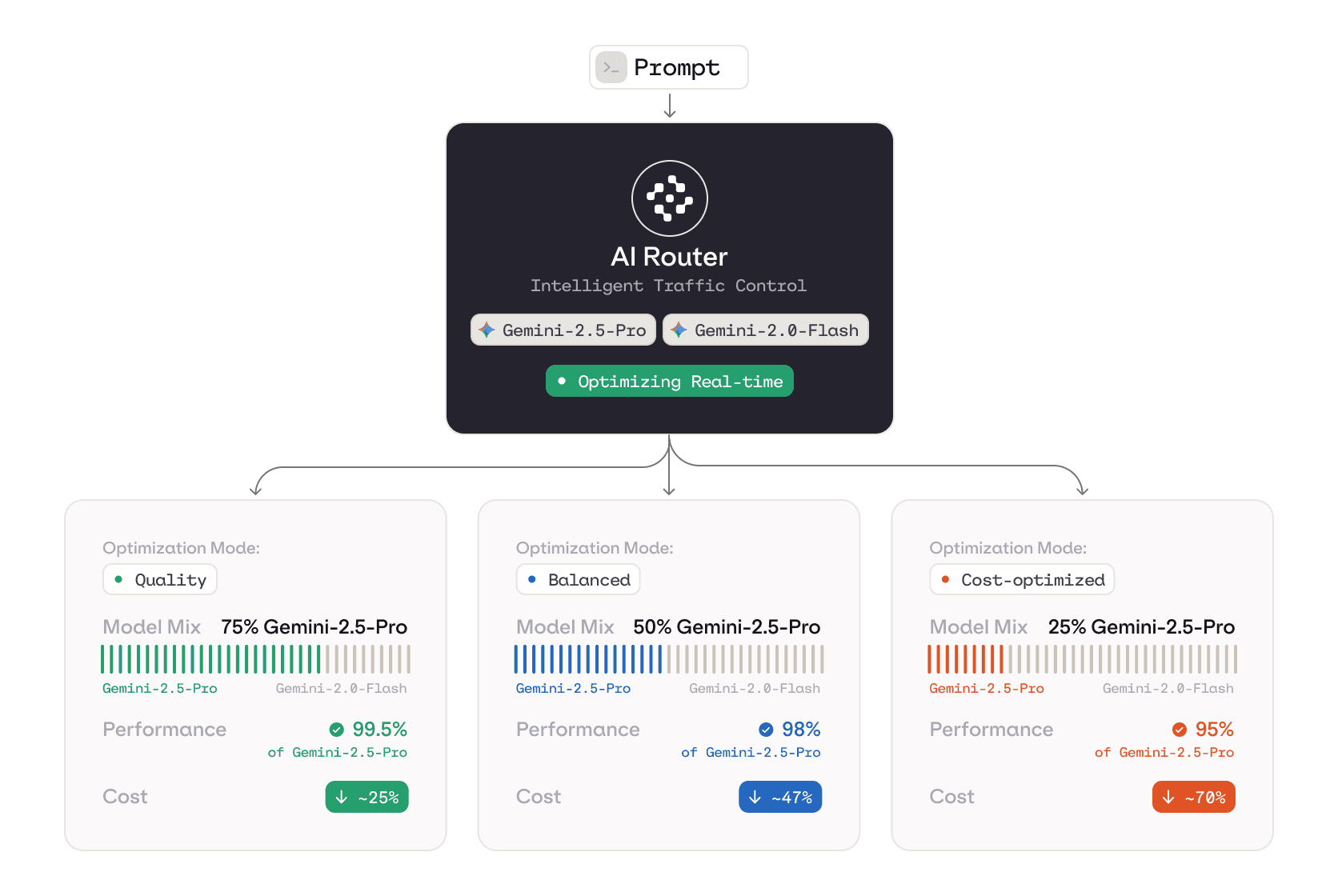

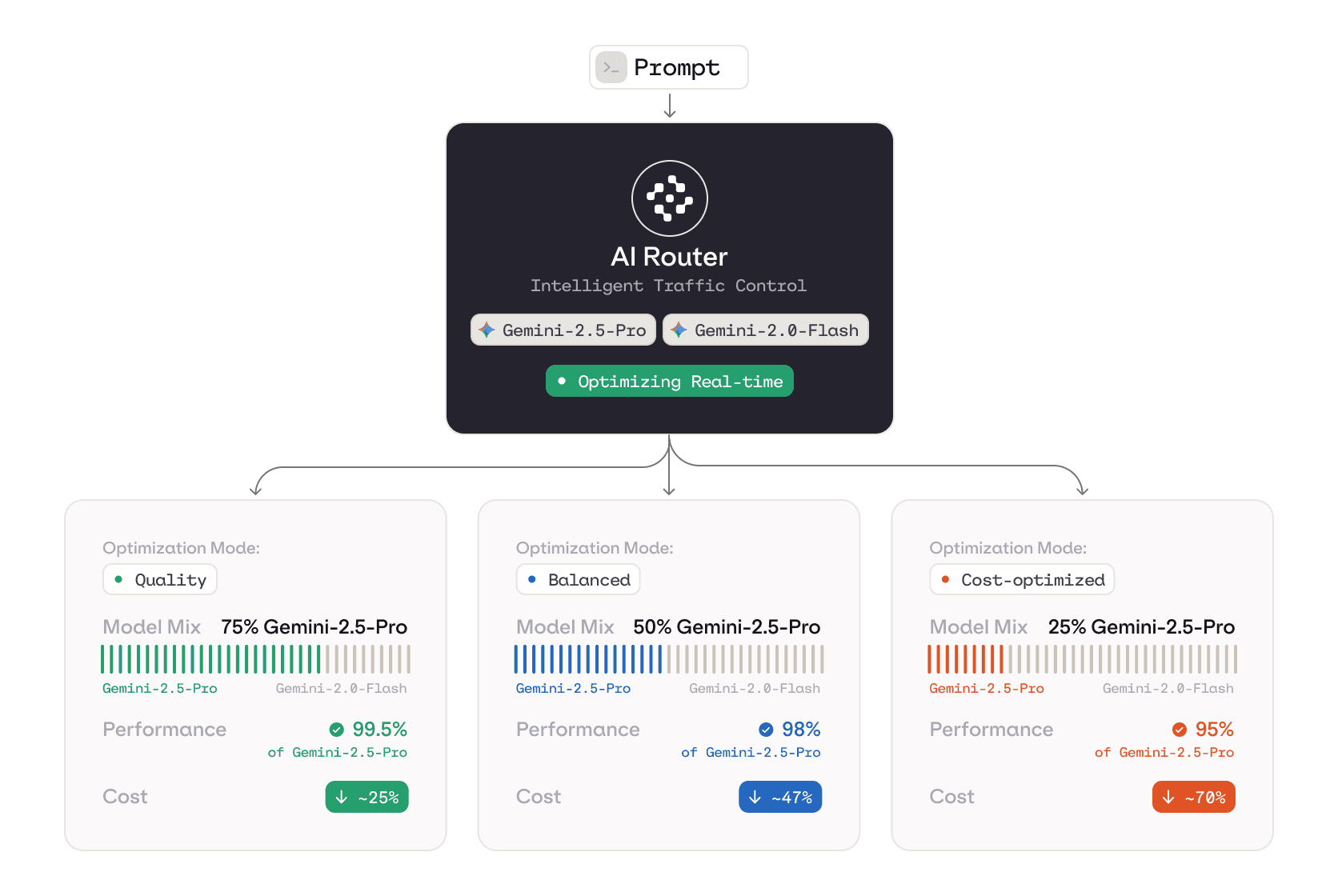

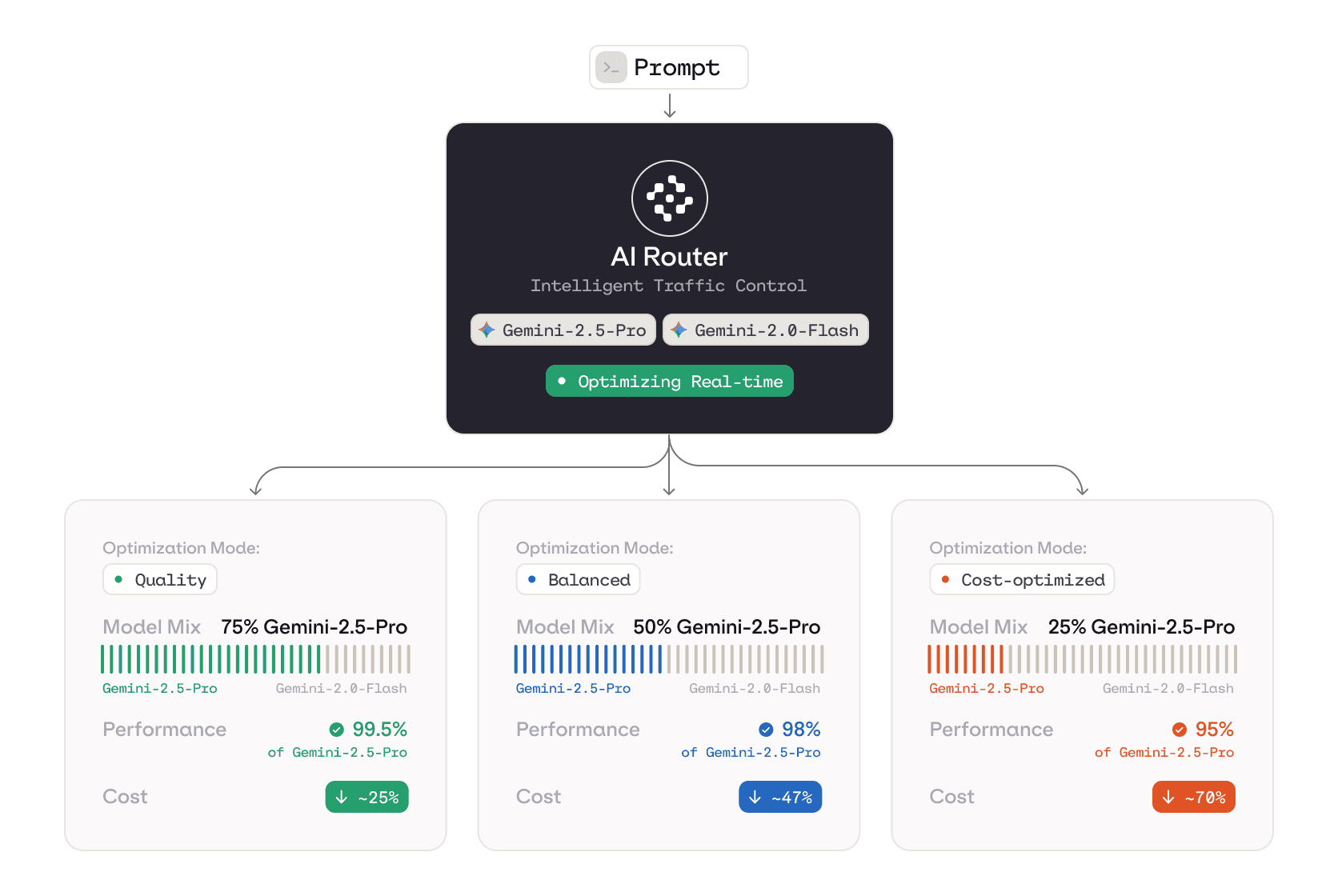

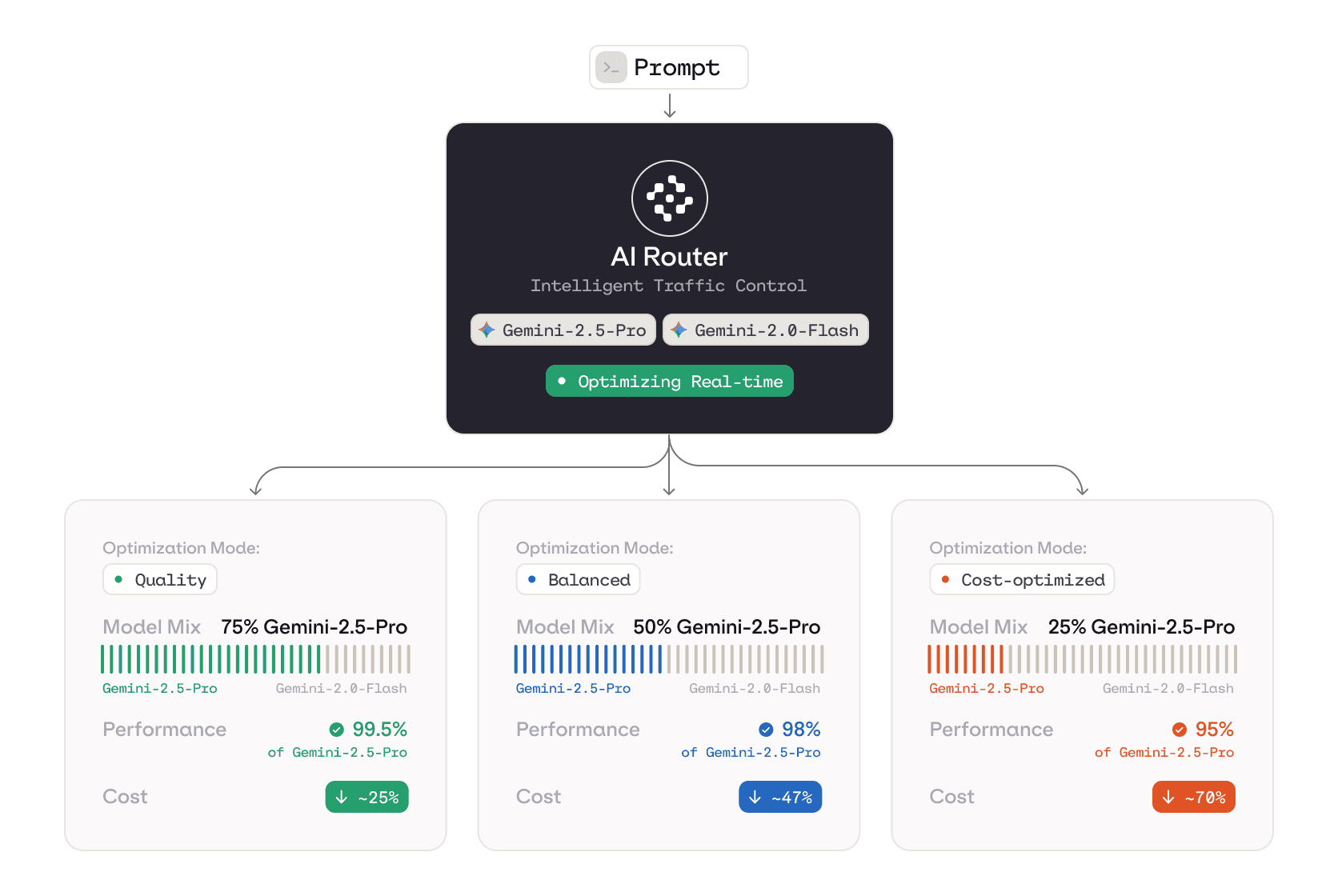

Intelligent LLM Routing

Cut LLM costs by 50% from day one

Smart router

Immediate savings without compromising quality

Orq.ai’s smart routing dynamically selects the right model for every request so simple tasks don’t burn frontier-model budgets. Instead of sending everything to your most expensive LLM “just to be safe,” the router analyzes each prompt and routes it to the most cost-effective model that still meets quality requirements.
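
The page does not describe how the router scores prompts, so the following is only a toy illustration of the general idea: classify a request as simple or complex, then pick the cheapest model that is trusted with that class. The heuristic, the model ids, the prices, and the `classify` and `pickModel` functions are all hypothetical.

```typescript
type Complexity = "simple" | "complex";

interface Candidate {
  model: string;              // hypothetical model id
  inputUsdPerMTokens: number; // hypothetical price
  handles: Complexity[];      // request classes this model is trusted with
}

// Crude stand-in for prompt analysis: long prompts, or prompts that ask
// for multi-step work, are treated as complex.
function classify(prompt: string): Complexity {
  const wordCount = prompt.trim().split(/\s+/).length;
  const asksForReasoning = /\b(prove|analy[sz]e|step by step|refactor)\b/i.test(prompt);
  return wordCount > 200 || asksForReasoning ? "complex" : "simple";
}

// Picks the cheapest candidate that is allowed to handle the request class.
function pickModel(prompt: string, candidates: Candidate[]): Candidate {
  const needed = classify(prompt);
  const eligible = candidates.filter((c) => c.handles.includes(needed));
  if (eligible.length === 0) {
    throw new Error(`no model can handle ${needed} requests`);
  }
  return eligible.reduce((best, c) =>
    c.inputUsdPerMTokens < best.inputUsdPerMTokens ? c : best,
  );
}

const candidates: Candidate[] = [
  { model: "example/small", inputUsdPerMTokens: 0.5, handles: ["simple"] },
  { model: "example/frontier", inputUsdPerMTokens: 3.0, handles: ["simple", "complex"] },
];

const simplePick = pickModel("Translate 'hello' to French", candidates);
const complexPick = pickModel("Analyze this contract step by step", candidates);
```

Here the translation request goes to `example/small` and the contract analysis goes to `example/frontier`. A production router would replace `classify` with something far more robust, but the cost saving comes from the same selection step.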

Real-time decisions

Cost-optimized

How it works

1. Sign up

Create your Orq.ai account and get instant access to the AI Router.

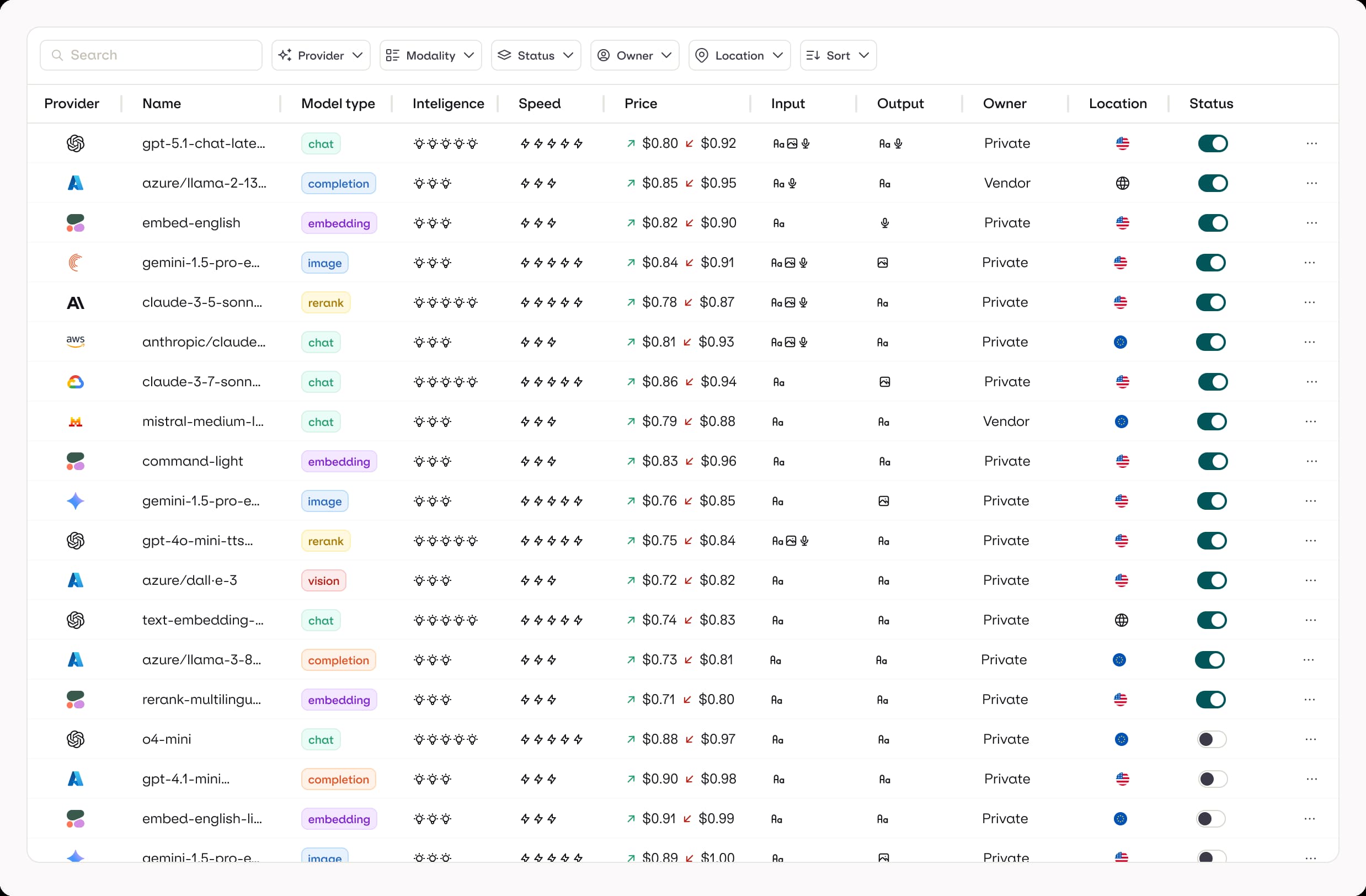

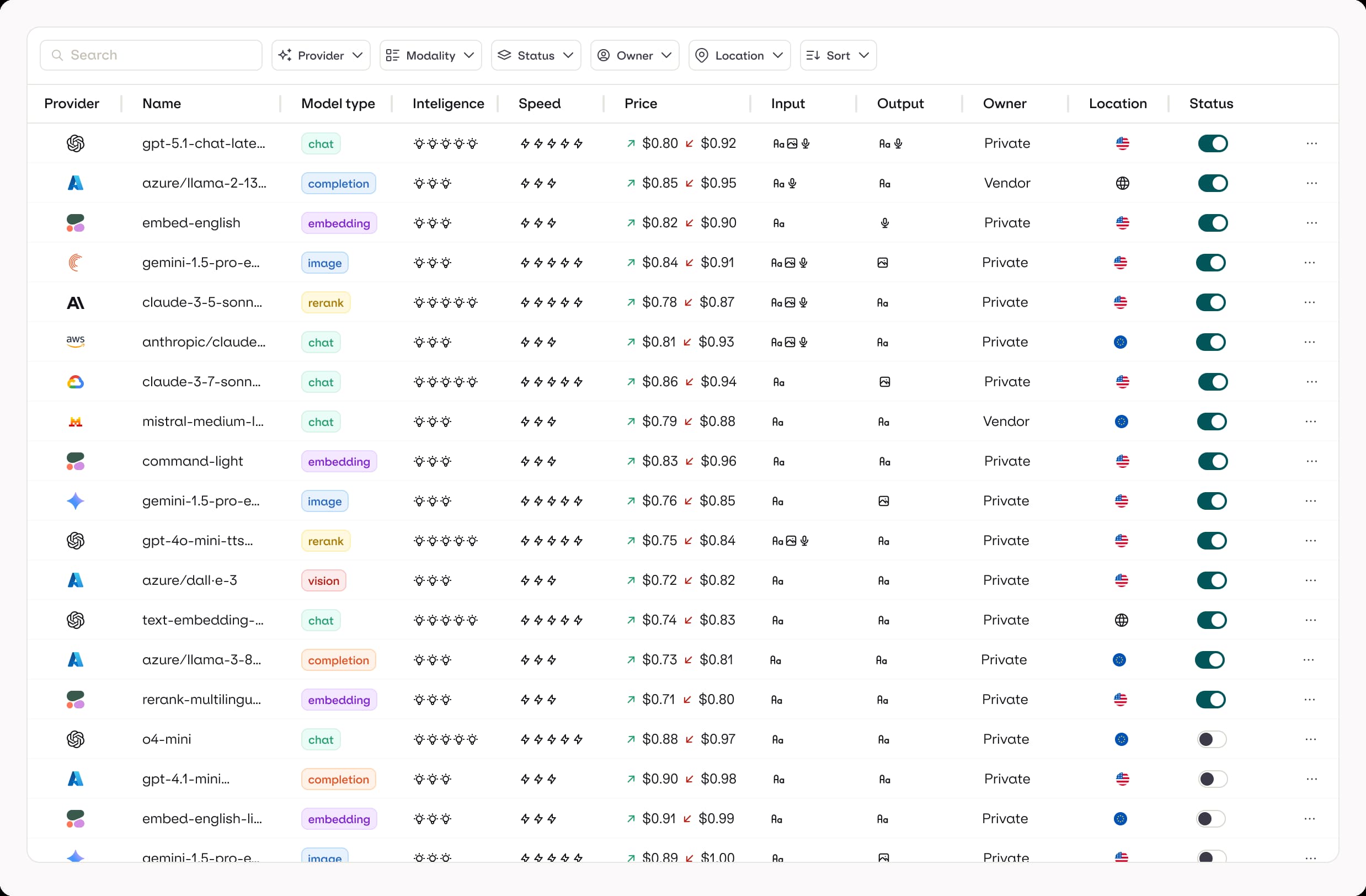

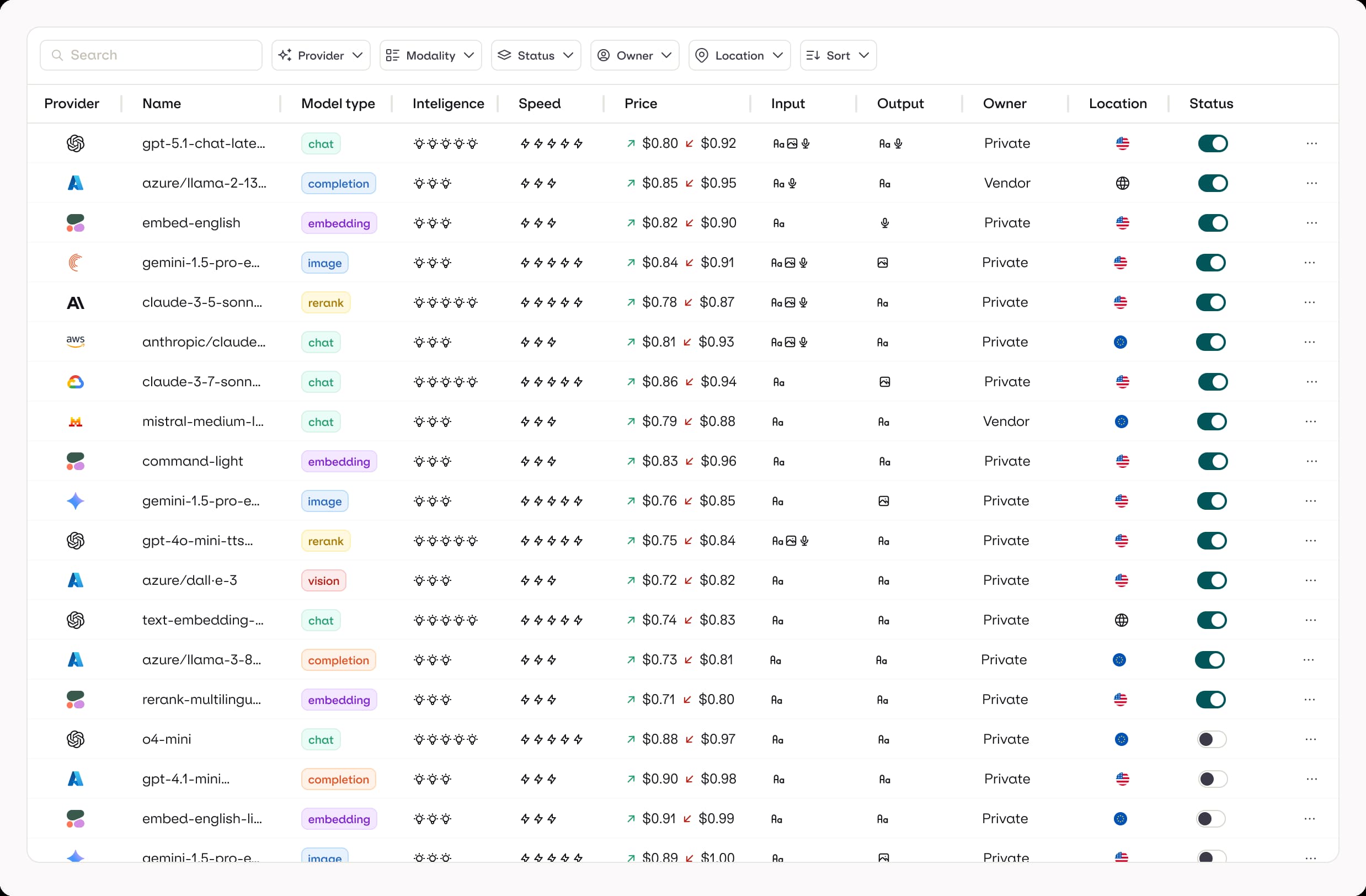

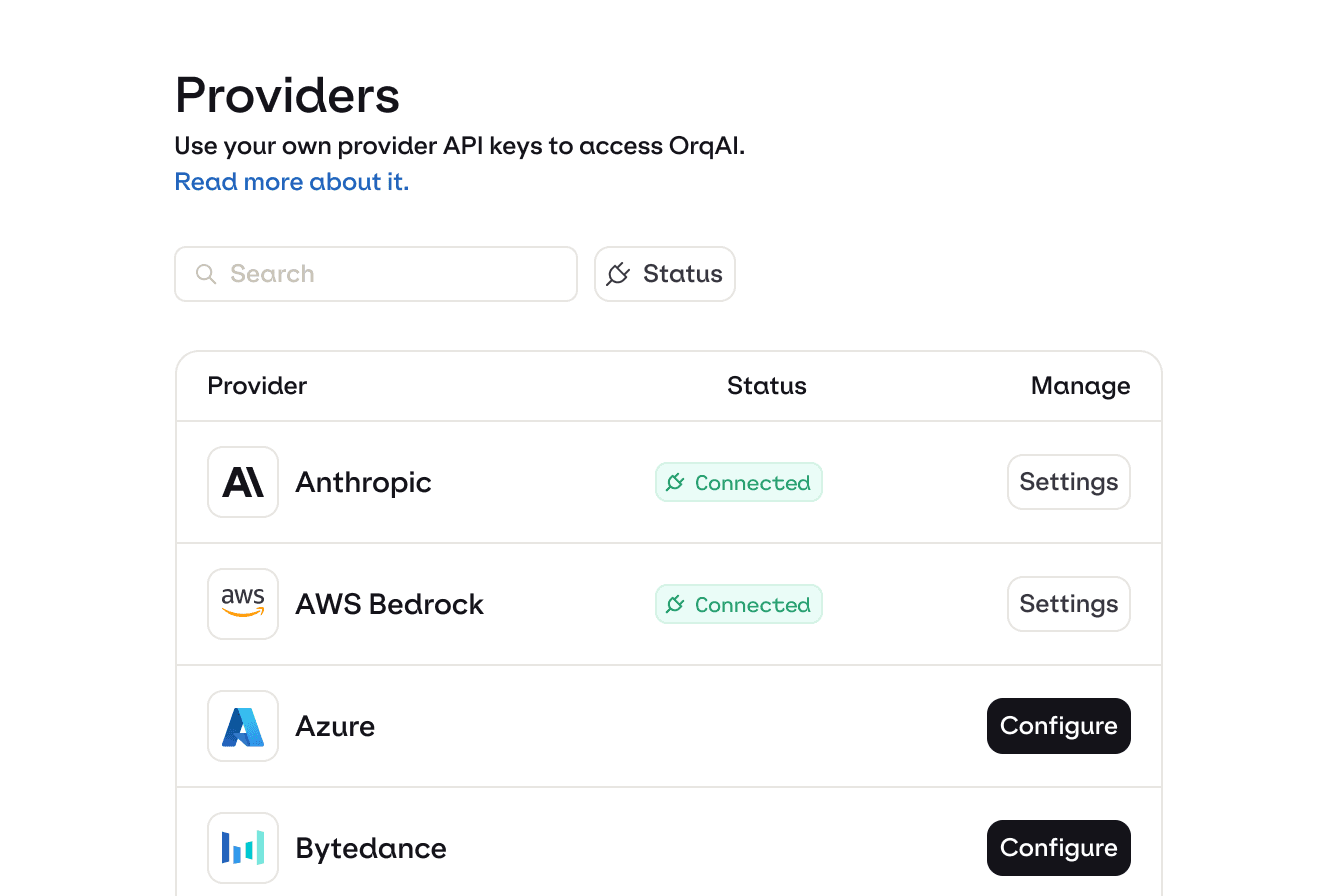

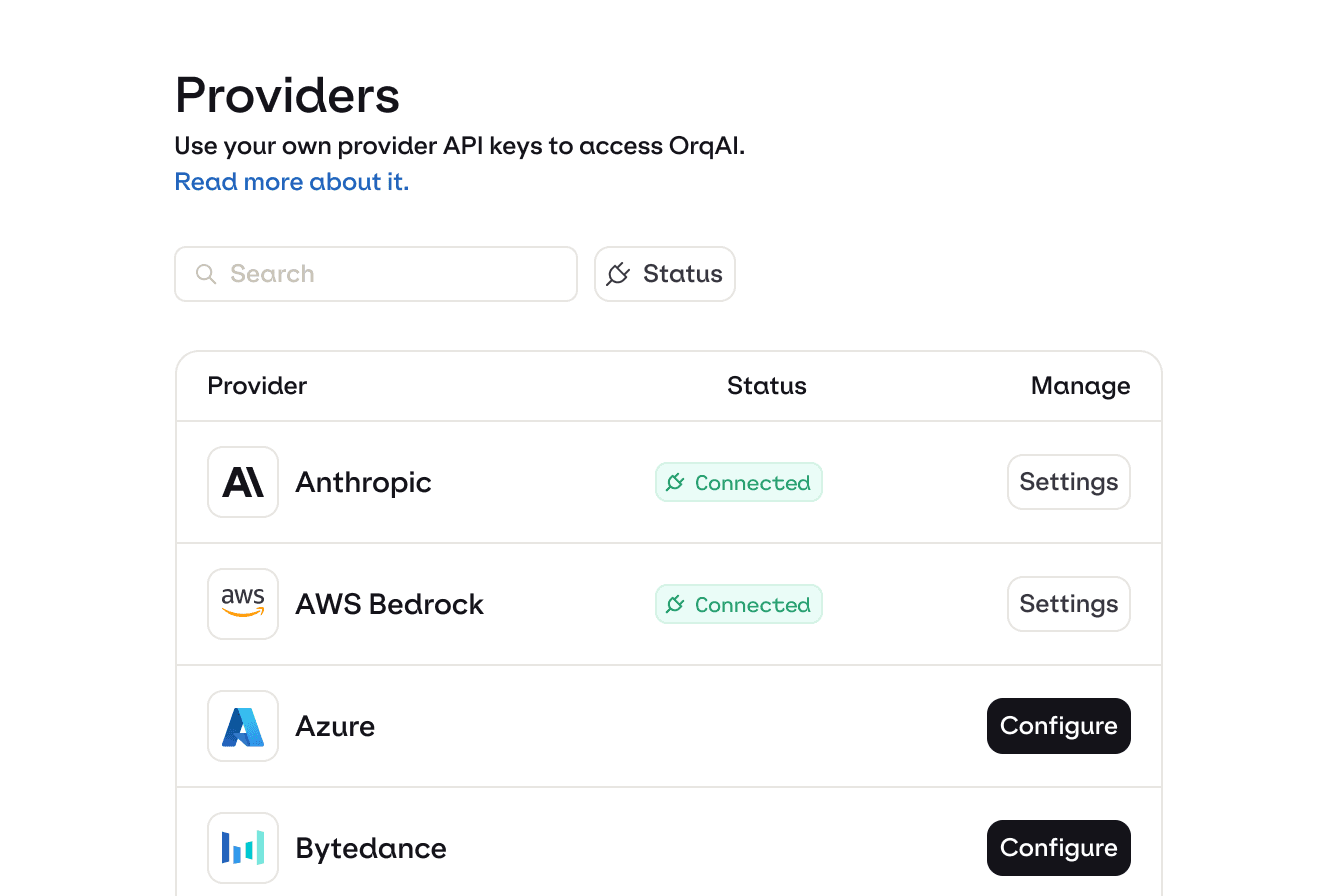

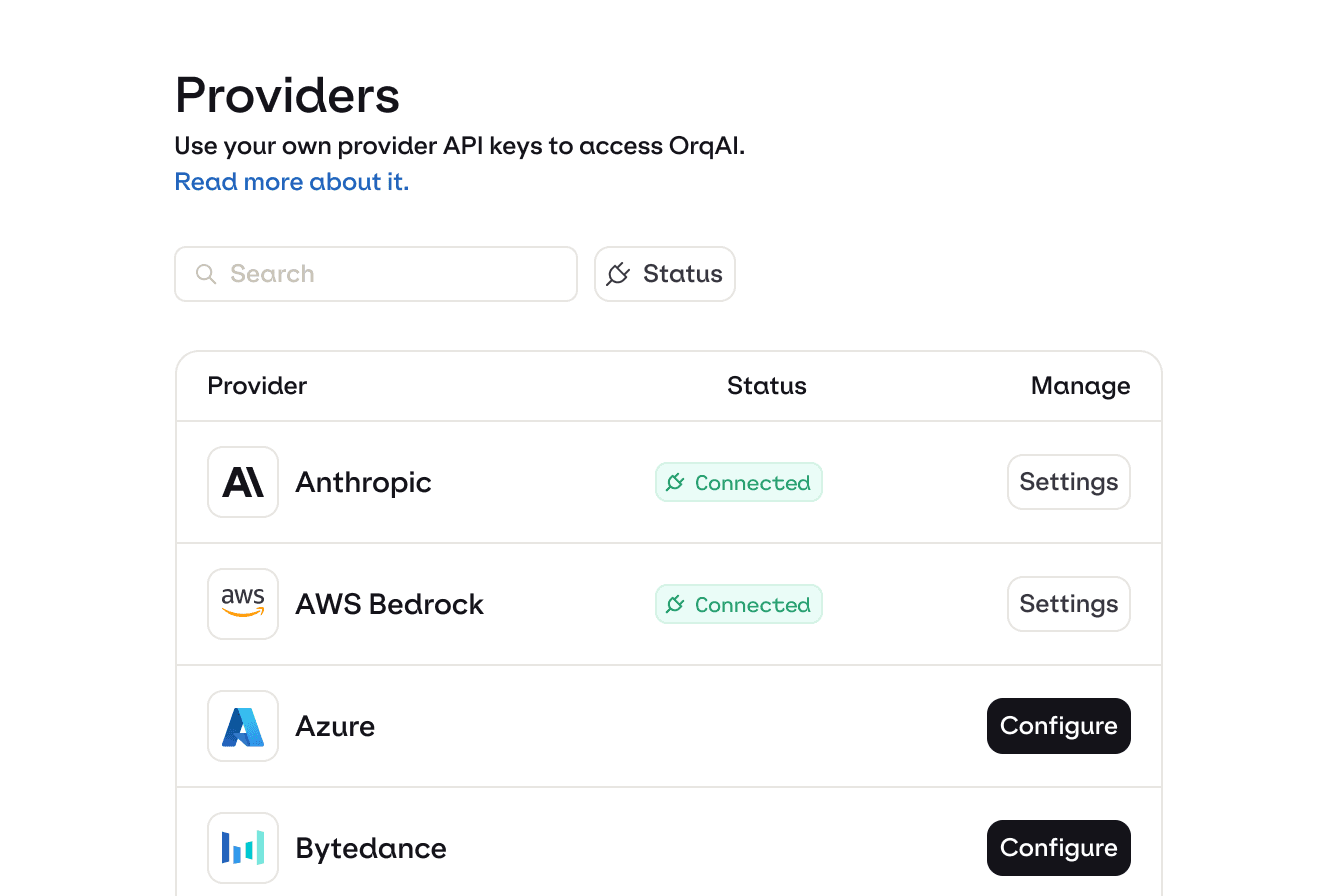

2. Enable your models

Connect and configure the models and providers you want to route across.

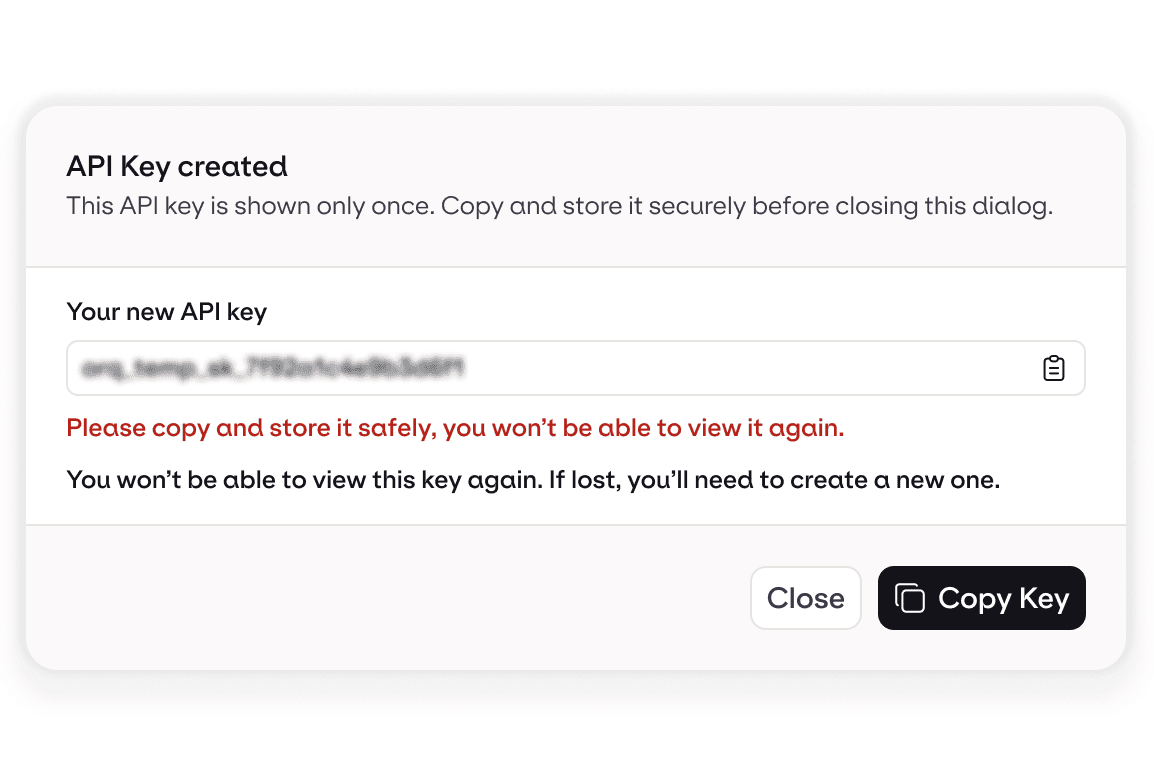

3. Get your API key

Start sending AI traffic through a single, production-ready endpoint.

Featured Models

anthropic · chat

claude-3-7-sonnet-latest

Anthropic's most intelligent model to date and the first hybrid reasoning model on the market.

Input: $3.00 / M tokens

Output: $15.00 / M tokens

mistral · chat

mistral-large-2512

Flagship open-weight multimodal model with 41B active parameters and 675B total parameters. Mistral's top-tier reasoning model for high-complexity tasks.

Input: $0.50 / M tokens

Output: $1.50 / M tokens

openai · chat

gpt-5-chat-latest

GPT-5 Chat points to the GPT-5 snapshot currently used in ChatGPT. OpenAI recommends GPT-5 for most API usage, but this GPT-5 Chat model can be used to test its latest improvements for chat use cases.

Input: $1.25 / M tokens

Output: $10.00 / M tokens
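
Per-million-token prices translate into request costs with simple arithmetic. The sketch below uses the three prices listed above; the `PRICING` table and the `estimateCostUsd` helper are hypothetical names, and the token counts are made up for the example.

```typescript
interface Pricing {
  inputUsdPerMTokens: number;
  outputUsdPerMTokens: number;
}

// Prices copied from the listing above (USD per million tokens).
const PRICING: Record<string, Pricing> = {
  "claude-3-7-sonnet-latest": { inputUsdPerMTokens: 3.0, outputUsdPerMTokens: 15.0 },
  "mistral-large-2512": { inputUsdPerMTokens: 0.5, outputUsdPerMTokens: 1.5 },
  "gpt-5-chat-latest": { inputUsdPerMTokens: 1.25, outputUsdPerMTokens: 10.0 },
};

// cost = input tokens / 1M * input price + output tokens / 1M * output price
function estimateCostUsd(model: string, inputTokens: number, outputTokens: number): number {
  const price = PRICING[model];
  if (!price) throw new Error(`no pricing for ${model}`);
  return (
    (inputTokens / 1_000_000) * price.inputUsdPerMTokens +
    (outputTokens / 1_000_000) * price.outputUsdPerMTokens
  );
}

// Example workload: 1,000,000 input tokens and 200,000 output tokens.
const claudeCost = estimateCostUsd("claude-3-7-sonnet-latest", 1_000_000, 200_000);
const mistralCost = estimateCostUsd("mistral-large-2512", 1_000_000, 200_000);
const gptCost = estimateCostUsd("gpt-5-chat-latest", 1_000_000, 200_000);
```

For that workload the estimates come to roughly $6.00, $0.80, and $3.25 respectively, which shows how much the choice of model can move the bill for identical traffic.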

Who it’s for

Engineering teams

Experiment, compare, and switch between LLMs without hard-coding providers or rewriting logic.

Product teams

Ship AI features to production while keeping cost, performance, and reliability in check at scale.

Platform teams

Standardize LLM access, enforce guardrails, and give teams one approved AI entry point.
